Obius

Native XR audio and music creation instrument

DOUBLE POINT

2022

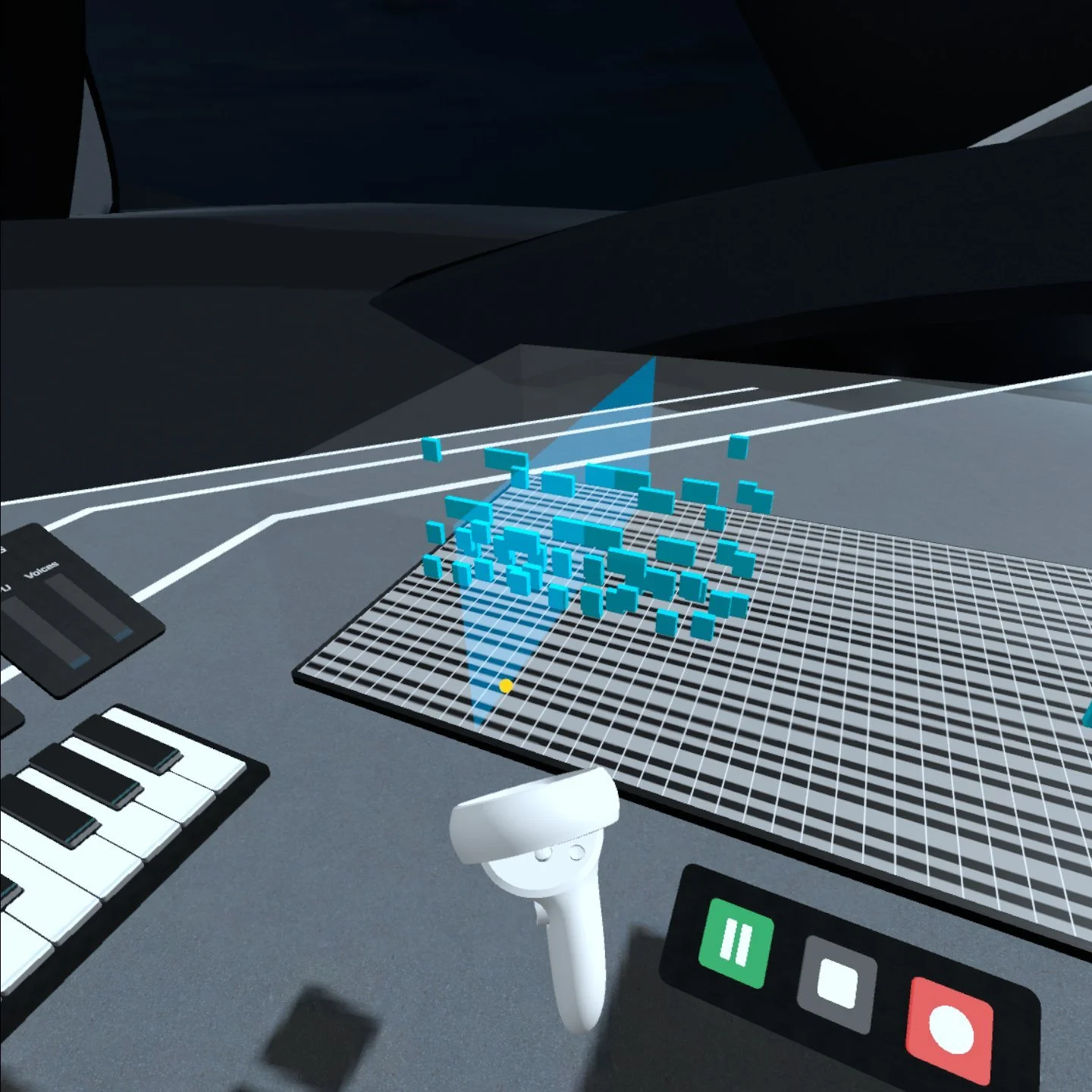

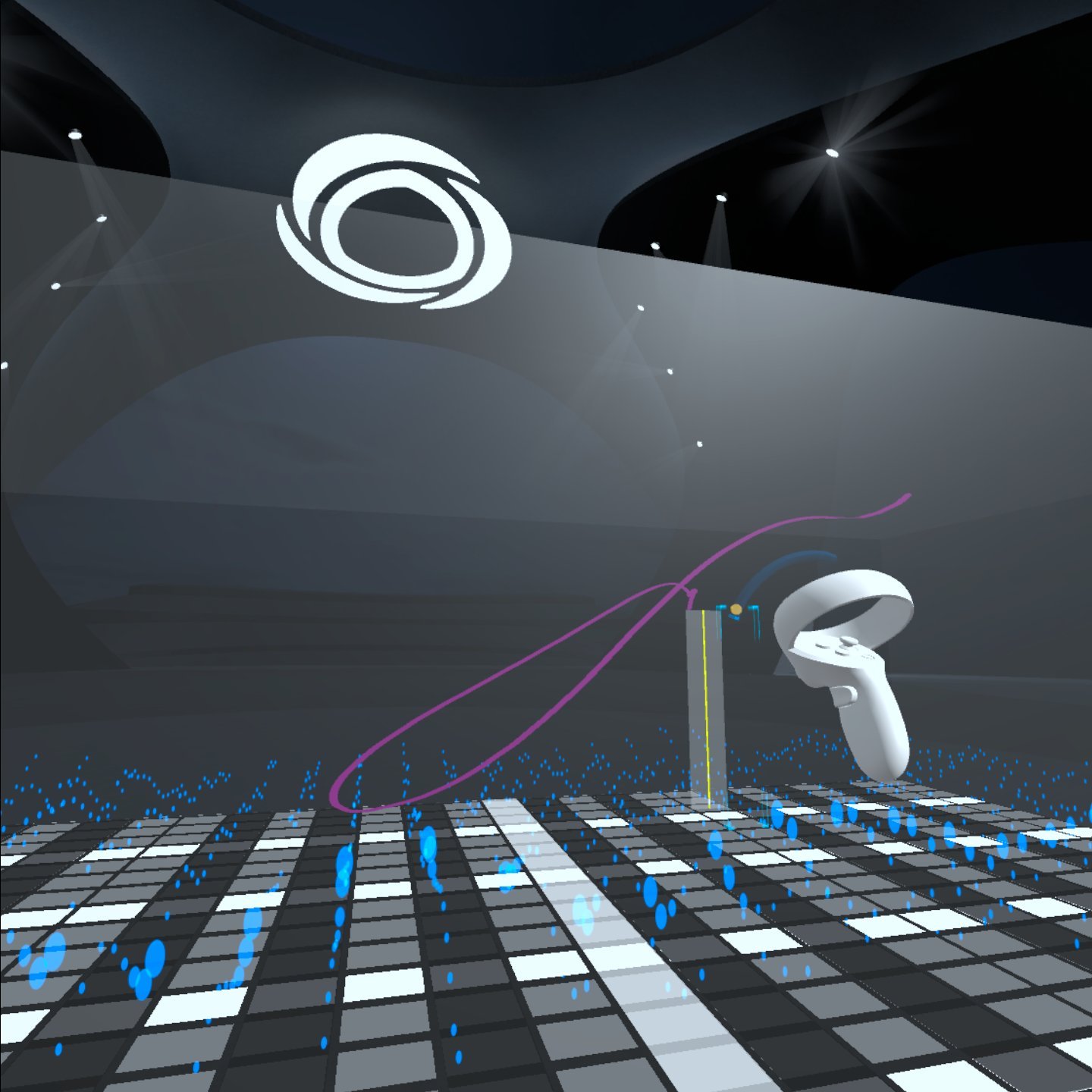

Obius is a native music creation app developed for the Meta Quest, supporting both full VR and mixed reality (pass-through) modes. It enables users to compose music in an immersive 3D environment by placing and drawing sounds in a spatial sequencer. These sequences can then be triggered using a full-size virtual piano keyboard, allowing for real-time performance and experimentation.

The tool supports complex musical layering: users can trigger multiple sequences simultaneously, each loaded with different samples, to build rich, orchestral soundscapes.

XR | Audio creation | Sequencer | Synthesizer | Meta Quest | Unity | Music | FMOD

My role

Lead Developer

Unity / C#

Integrated Meta SDK for XR device support

Integrated FMOD for advanced audio functionality

Led concept development and rapid prototyping

Built a sequencer grid with the vertical axis mapped to volume

Created a sound drawing system for placing audio as blocks or freeform synth lines

Developed a hand-following library interaction system for previewing and selecting samples

Built a project management system for saving sequences, collections, and full projects

Implemented an audio timeline with features for BPM adjustment, quantization, looping, recording, scrubbing, and playback

Built a full-scale virtual piano for sequence triggering, with key-based transposition and per-key toggles

Designed a sound-reactive VFX system synced with the timeline

Optimized performance for running natively on Quest 2 and 3

Collaborated with a 3D artist on asset optimization and with a sound designer to set up libraries and effects
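As an illustration of the BPM and quantization features listed above, here is a minimal sketch of snapping a recorded event time to the nearest grid step. It is written in Python for brevity and is not the project's Unity/C# code; the function name `quantize` and the `steps_per_beat` parameter are hypothetical and chosen only for this example.

```python
import math


def quantize(time_s: float, bpm: float, steps_per_beat: int = 4) -> float:
    """Snap a time in seconds to the nearest grid step.

    Assumption: the grid divides each beat into `steps_per_beat` equal
    steps (4 gives sixteenth notes in 4/4). This is an illustrative
    choice, not a documented Obius setting.
    """
    if bpm <= 0 or steps_per_beat <= 0:
        raise ValueError("bpm and steps_per_beat must be positive")
    step_s = 60.0 / bpm / steps_per_beat  # duration of one grid step
    # floor(x + 0.5) rounds halves upward, avoiding Python's
    # round-half-to-even behaviour in round().
    return math.floor(time_s / step_s + 0.5) * step_s


# Usage: at 120 BPM with 4 steps per beat, one grid step is 0.125 s.
snapped = quantize(0.26, bpm=120)  # 0.25
```

Changing the BPM only changes the step duration, so the same grid index maps to a new time without re-recording the event.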

Switching between drawing and placing audio samples from the library

Scrubbing the timeline through a recorded song

Adding one-shots and drawn synths to a sequence

Filtering which keys will trigger specific sequences

Switching between VR and MR modes

Selecting sounds by name

Challenges & Solutions

Challenge: Translating the music creation process to XR

The Problem: Physical instruments offer rich tactile feedback that's hard to replicate in virtual environments. XR risks flattening that expressiveness, making digital music tools feel less intuitive or engaging.

My Solution: I designed a spatially driven sequencer that maps musical parameters to natural 3D hand movements. While the user draws in sounds, rotation controls sound effects, height adjusts volume, and lateral motion shifts pitch. This approach turns the absence of physical tactility into a strength, making music creation feel embodied and fluid in XR.
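The mapping described above can be sketched roughly as follows. This is a language-agnostic illustration in Python, not the project's Unity/C# implementation. The type names, the height range, the semitones-per-metre scale, and the roll range are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class HandPose:
    x: float         # lateral position in metres (hypothetical unit)
    y: float         # hand height in metres
    roll_deg: float  # wrist rotation in degrees


@dataclass
class SoundParams:
    pitch_semitones: int  # offset from the base pitch
    volume: float         # 0.0 to 1.0
    effect_amount: float  # 0.0 to 1.0


def clamp01(value: float) -> float:
    """Limit a value to the range 0.0 to 1.0."""
    return max(0.0, min(1.0, value))


def map_pose(
    pose: HandPose,
    min_height: float = 0.8,           # assumed lowest useful hand height
    max_height: float = 1.8,           # assumed highest useful hand height
    semitones_per_metre: float = 12.0, # assumed pitch scale
    max_roll_deg: float = 90.0,        # assumed roll for full effect
) -> SoundParams:
    """Map a hand pose to sound parameters.

    Height drives volume, lateral position drives pitch, and wrist
    rotation drives the effect amount, as in the description above.
    """
    volume = clamp01((pose.y - min_height) / (max_height - min_height))
    pitch = math.floor(pose.x * semitones_per_metre + 0.5)
    effect = clamp01(abs(pose.roll_deg) / max_roll_deg)
    return SoundParams(pitch, volume, effect)


# Usage: a hand half a metre to the right, mid-height, rolled 45 degrees.
params = map_pose(HandPose(x=0.5, y=1.3, roll_deg=45.0))
```

Clamping volume and effect keeps out-of-range poses from producing invalid values, while pitch is left unclamped so lateral reach decides the playable range.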

More about

Audiospace.io and Obius

Obius on the Meta Quest Store: https://www.meta.com/en-gb/experiences/obius/6072486836145332/